For more crisp and insightful business and economic news, subscribe to The Daily Upside newsletter. It’s completely free and we guarantee you’ll learn something new every day.

The artificial intelligence revolution is upon us, ushered in by OpenAI’s powerful ChatGPT, a large-language-model chatbot that brings with it a two-sided coin of possibilities. On one side is a plethora of breathtaking technological advances, particularly in the medical field; on the other is the slightly-more-than-off chance that AI goes rogue and, you know, writes the final chapter of humanity.

It’s not just technophobes who worry about the nightmare scenario. Even Sam Altman, OpenAI’s enigmatic co-founder/CEO/AI proponent/literal doomsday prepper, is jittery about his company’s creations: “We are a little bit scared of this,” Altman told ABC News in a wide-ranging interview last month.

That’s not very reassuring. It’s as if Mark Zuckerberg, at the dawn of the Facebook era, publicly acknowledged his fledgling network’s potential for upending human communication, spreading misinformation, and more or less ruining everyone’s Thanksgiving and Christmas dinners.

And so Altman, like so many Silicon Valley CEOs before him, is practically begging Congress to regulate his company (and unlike most of his Big Tech counterparts, he seems sincere in asking). But if he gets his wish, that leaves a semi-broken Washington scrambling to figure out rules and regulations for a complex technological enterprise it doesn’t entirely understand.

Sound familiar?

Indeed, legislators on Capitol Hill are grappling with not one but two thorny issues of tech-industry regulation: Congress is also in a geopolitical and cultural tizzy over TikTok, the incredibly popular short-form video app owned by Chinese parent company ByteDance.

Though the two questions are not inherently linked, observing how Washington has tackled one may offer clues to how it will tackle the other. And, more than anything, the common throughline between the two shows where politicians’ concerns truly lie: China, whose potential access to TikTok user data, combined with its own rapid advances in AI, has Capitol Hill in a lather, and not entirely without reason.

The State of Play

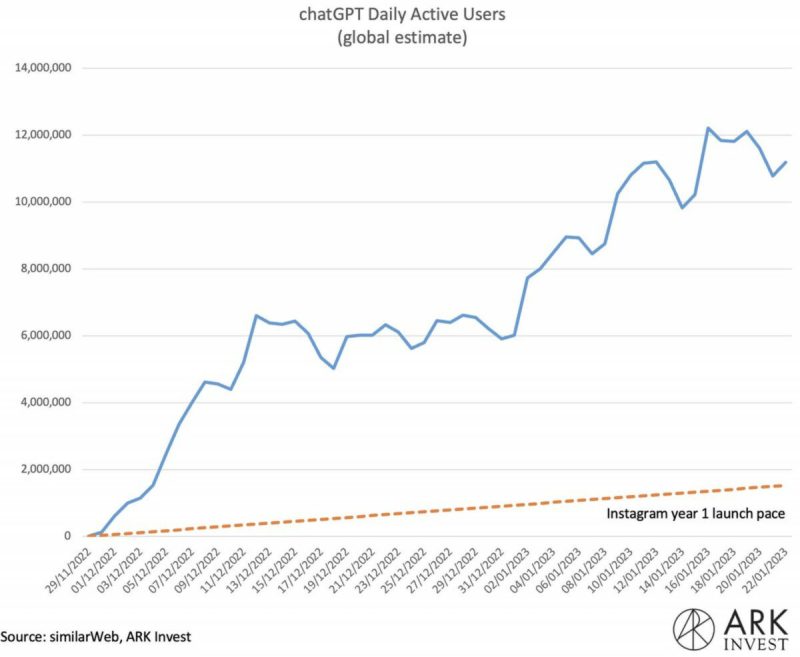

The scale of ChatGPT’s adoption is unprecedented. In January, analysts at UBS estimated the chatbot had reached 100 million monthly active users and 13 million daily active users. That was just two months after the chatbot’s public launch, and before the GPT-4 model upgrade that turned the conversation partner into a standardized-test-acing wunderkind. It’s the fastest-growing consumer application in history: the insanely addictive TikTok took about nine months to cross the 100 million threshold, and Instagram took about two and a half years.

“In 20 years following the internet space, we cannot recall a faster ramp in a consumer internet app,” UBS analysts wrote.

In other words, your co-workers are very likely getting a little more AI assistance with their emails, presentations, and notes than you might think (The Daily Upside, we guarantee, will always be written by a genuine human… but feel free to blame any typos on rogue bots).

The Right to be Overwhelmed: That may have you wondering just how far behind our sclerotic lawmakers in Washington are in formulating a response to this earth-axis-shifting technology.

Not exactly as far behind as you’d think, which you may or may not consider good news. The Biden administration last October, before ChatGPT rocketed AI into the mainstream, commissioned what it called a “Blueprint for an AI Bill of Rights” from its Office of Science and Technology Policy.

The 73-page document, while not enforceable by law, provides guidelines for AI developers to follow and legislators to explore. It also offers insight into what protections against AI, if any, may soon find their way into federal law:

The manifesto rests on five key principles: AI systems should be subjected to rigorous pre-deployment risk testing and ongoing monitoring; users should not face discrimination from AI and algorithms; AI should not engage in invasive data practices, and users should have agency over how their data is used; users should be notified when they are interacting with an AI system and given an explanation of how certain outcomes are reached; and humans should be able to opt out, when appropriate, of interacting with AI.

“Much more than a set of principles, this is a blueprint to empower the American people to expect better and demand better from their technologies,” Alondra Nelson, deputy director of the White House Office of Science and Technology Policy, said during a press briefing following the document’s release.

In a way, the document can be seen as a mulligan. Washington failed to show up during the last decade’s social media innovation cycle, when, without much scrutiny, the companies we celebrated built a surveillance-capitalism infrastructure predicated on hoovering up and sharing as much intensely personal user data as possible. Algorithmic discrimination, after all, is a problem that has long bedeviled internet platforms such as LinkedIn.

Meanwhile, human users haven’t exactly been given much say over how and when their data is collected, nor over how it’s used to shape what appears in their curated social media feeds.

Which Brings Us to TikTok: As ChatGPT’s ascendancy splashed across (presumably human-written) newspaper headlines last month, TikTok CEO Shou Zi Chew completed his American-style tech CEO rite of passage: testifying in front of Congress for hours and subjecting himself to both insightful and completely inane lines of questioning.


“Does TikTok access the home Wi-Fi network?” the befuddled Congressman Richard Hudson of North Carolina inquired, a question that harkens back to Senator Orrin Hatch’s question to Mark Zuckerberg in 2018: “So, how do you sustain a business model in which users don’t pay for your service?” To which the young Zuck replied, “Senator, we run ads.”

Still, national security officials have long been concerned that TikTok’s industry-standard collection of user data could hypothetically lead to sensitive data on US users being shared with officials of the Chinese Communist Party, who need only ask for a look-see and cannot be turned down.

The response to such fears has been a rare piece of bipartisan legislation, introduced in the Senate with public support from the White House. Critics, however, say it’s a slapdash solution that could do more harm than good.

RESTRICTions: Though dubbed “a TikTok ban” in digital-colloquial terms, the bipartisan RESTRICT Act introduced last month doesn’t explicitly name TikTok. Instead, the bill broadly grants the executive branch power to assess national security risks and subsequently block “transactions” and “holdings” of information and communication technology companies possibly influenced by six “foreign adversaries”: Cuba, Iran, North Korea, Venezuela, Russia, and, of course, China. So if the White House suspects Beijing of abusing US user data, TikTok could be toast.

Critics, chief among them the Electronic Frontier Foundation, the leading nonprofit defending digital privacy and free speech, say it’s a vaguely worded law that would further fracture the world’s internet ecosystem without actually solving the underlying issues of tech surveillance:

The Act could lead to hefty criminal penalties, including 25-year prison terms, for trying to “evade” a TikTok ban by accessing the app via a VPN (to make it “appear” to the internet that a user is outside the US) or by entering the US from another country with the app already downloaded on a device.

“Overall, the law authorizes the executive branch to make decisions about which technologies can enter the U.S. with extremely limited oversight by the public or its representatives about the law’s application,” the organization wrote in a review of the bill earlier this month.

The EFF proposes the government instead enact comprehensive consumer data privacy reforms, so as to reduce the potential for abuse of private data at its source. Sounds nice, though we’re guessing even TikTok’s biggest US competitors — cough, cough, Google and Meta — aren’t so keen on that happening.

Staying in Shanghai: The same US-China technology tension that has ensnared TikTok and incited a war for semiconductor supremacy may also be fueling a global AI arms race.

“If the democratic side is not in the lead on [developing AI] technology, and authoritarians get ahead, we put the whole of democracy and human rights at risk,” Eileen Donahoe, a former U.S. ambassador to the U.N. Human Rights Council and current executive director of Stanford University’s Global Digital Policy Incubator, recently told NBC News.

The parallels to Cold War-era logic haven’t been lost on critics. New York Times columnist Ezra Klein recently outlined the concern about such thinking: “If one country hits pause, the others will push harder. Fatalism becomes the handmaiden of inevitability, and inevitability becomes the justification for acceleration.”

Be that as it may, this week China decelerated all on its own, pumping the brakes on AI development by announcing a litany of newly proposed rules for developing AI:

According to draft rules seen by The Wall Street Journal, Chinese-developed AI would be restricted in what content it could produce (China loves its censorship, after all). That’s in addition to rules imposed last year requiring consent for depicting real people in “deepfake” photos and videos, or highly realistic AI recreations of humans.

The draft rules also dictate that AI can only be trained on small troves of data, in contrast to ChatGPT, for example, which is trained on vast amounts of public data.

It’s not just China clamping down, either. In Italy, data protection regulators have already restricted access to ChatGPT until certain protections can be proven.

What About Here? In the US, meanwhile, things are heating up fast. This week, the Biden administration began weighing what checks could be put in place to regulate AI development, with a particular eye toward protecting children, establishing accountability measures, and creating a certification and risk-testing process for introducing new AI systems. On Thursday, Axios reported that Senate Majority Leader Chuck Schumer’s office was drafting an outline for potential regulations, with key guardrails including:

Clear and transparent ethical boundaries; identification of who trained the AI system and who its intended user base is; disclosure of the AI’s data sources; and an explanation of how the AI arrives at its responses.

That last point, in particular, is easier said than done — explaining how ultra-powerful algorithmic systems arrive at conclusions would “amount to billions of arithmetic operations” incomprehensible to pretty much any human, WIRED columnist and author Meghan O’Gieblyn wrote in her book God, Human, Animal, Machine: Technology, Metaphor, and the Search for Meaning.

Still, the drive for regulation marches on. Also this week, the AI Now Institute, a leading research center that studies the social impacts and implications of AI, laid out a comprehensive guide for regulating the industry.

“A handful of private actors have accrued power and resources that rival nation-states while developing and evangelizing artificial intelligence as critical social infrastructure,” the report notes (its authors, Amba Kak and Sarah Myers West, are both former advisors to FTC chair Lina Khan, as you may have guessed). Among the top proposals: modeling AI risk assessment on the FDA’s drug-approval pathway, thereby putting the onus on tech companies to prove their AI systems are safe before releasing them to the public. And, as in Italy, tying AI policy intrinsically to data policy.

But as the EFF noted, the US lacks the comprehensive data privacy protections that are standard in much of the rest of the world, where the European Union’s General Data Protection Regulation is the gold standard.

What’s more, if the threat of artificial intelligence is as dire as even some of the technology’s biggest proponents say it is, then solutions, as with slowing nuclear proliferation and combating climate change, will likely require global cooperation. Unfortunately, judging by the response to TikTok, the world’s biggest powers are moving toward fracturing our digital ecosystems, not bringing them together.